(Originally published by Stanford Human-Centered Artificial Intelligence on February 21, 2024)

The U.S. government has made swift progress and broadened transparency, but that momentum needs to be maintained for the next looming deadlines.

January 28, 2024 was a milestone in U.S. efforts to harness and govern AI. It marked 90 days since President Biden signed Executive Order 14110 on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (“the AI EO”). The government is moving swiftly on implementation with a level of transparency not seen for prior AI-related EOs. A White House Fact Sheet documents actions taken to date, a welcome departure from prior opacity on implementation. The scope and breadth of the federal government’s progress on the AI EO is a success for the Biden administration and the United States, and it also demonstrates the importance of our research on the need for leadership, clarity, and accountability.

Today, we announce the first update of our Safe, Secure, and Trustworthy AI EO Tracker, a detailed, line item-level tracker created to follow the federal government’s implementation of the AI EO’s 150 requirements.

The White House boasts that 21 requirements in the first stage of the AI EO were “completed,” suggesting a 100 percent success rate. We were only able to conclusively verify 19 requirements as having been fully implemented or shown substantive progress, suggesting a 90 percent success rate for first-stage “completed” requirements of the AI EO that are actually transparent to the public.

As we explained in our initial analyses, the EO is a bold and urgent effort to rally AI action across the federal government. However, its success hinges on timely and transparent implementation by federal entities. Our review of publicly available information found that there are still noticeable gaps in the public disclosure of some AI EO requirements. Although the sensitivity of some requirements warrants less disclosure (e.g., disclosures raising cybersecurity or national security concerns), public verifiability remains an issue with other requirements.

How We Track Implementation

Our updated tracker provides information on the implementation status of 39 requirements based on 1) the White House’s Fact Sheet; 2) announcements, or public statements, made by the responsible federal entities or officials; and 3) official documents, media reports, and other conclusive evidence regarding specific line items publicly available as of February 8, 2024.

Requirements are marked “implemented” if there is sufficient public evidence of full implementation (e.g., official announcements, independent reporting) separate from the Fact Sheet. For example, section 5.2(a)(i)’s requirement that the National Science Foundation (NSF) launch a pilot of the National AI Research Resource (NAIRR) is verified not just by the Fact Sheet, but also by the launch of the NAIRR pilot website and major announcements by NSF, agency partners, and non-government partners. We marked requirements as “not verifiably implemented” if we could not find conclusive evidence of full implementation, such as section 8(c)(i) requirements on AI in transportation. A requirement is deemed “in progress” if it has been completed only partially, the extent of progress is ambiguous, or full completion of the requirement necessitates ongoing action. For example, while the Department of State’s pilot program to conduct visa renewal interviews within the United States satisfies section 5.1(a)(i), we marked this requirement as in progress because the action does not fully satisfy 5.1(a)(ii), which requires the continued availability of sufficient visa appointments for AI experts.
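The three status labels can be read as a small decision rule. The sketch below is one possible reading, not the tracker's actual procedure: the field names are hypothetical, and the order in which the conditions are checked is an assumption, since the text does not say how overlapping cases are resolved.

```python
from dataclasses import dataclass

IMPLEMENTED = "implemented"
IN_PROGRESS = "in progress"
NOT_VERIFIABLE = "not verifiably implemented"


@dataclass
class Evidence:
    # Hypothetical fields, invented for this illustration only.
    # Public evidence of full implementation that is separate from the Fact Sheet.
    independent_full_evidence: bool
    # Public evidence of partial completion, or progress of ambiguous extent.
    partial_or_ambiguous_evidence: bool
    # Full completion of the requirement necessitates ongoing action.
    requires_ongoing_action: bool


def classify(e: Evidence) -> str:
    """Assign a status label (assumed precedence, see lead-in)."""
    # No independent evidence of any kind: the claim cannot be verified.
    if not (e.independent_full_evidence or e.partial_or_ambiguous_evidence):
        return NOT_VERIFIABLE
    # Full, independently evidenced implementation with nothing left to do.
    if e.independent_full_evidence and not e.requires_ongoing_action:
        return IMPLEMENTED
    # Everything else: partial, ambiguous, or still requiring ongoing action.
    return IN_PROGRESS


# Rough encodings of the three examples discussed above.
nairr_pilot = classify(Evidence(True, False, False))
transportation = classify(Evidence(False, False, False))
visa_renewal = classify(Evidence(False, True, True))
```

Under these assumptions the NAIRR pilot comes out as implemented, the transportation requirement as not verifiably implemented, and the visa renewal requirement as in progress, matching the labels given in the text.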

Impressive Improvements on Transparency

The federal government has been admirably transparent over the past three months about its implementation efforts related to the AI EO. The White House Fact Sheet, published on the 90-day milestone, collates a list of agency actions that have been completed, alongside their prescribed deadlines. Federal agencies have also issued timely statements regarding their actions. For example, the National Institute of Standards and Technology (NIST) created a dedicated web page that outlines the agency’s responsibilities, deadlines, and news related to AI EO implementation, and Secretary of Commerce Gina Raimondo has made multiple announcements on EO-related actions.

These proactive disclosures are a significant improvement on previous government efforts to implement AI-related EOs, which prior research by Stanford HAI and the RegLab has found to be inconsistent and poorly disclosed. Last year, Congress responded to the criticisms raised by this research, in conjunction with increased media attention, by holding hearings and requesting regular updates from agencies regarding their AI governance initiatives.

The Administration seems to have taken these lessons to heart. The AI EO includes much clearer definitions of tasks, assigned responsibilities, timelines, and reporting requirements. And public accountability mechanisms appear to have spurred efforts to fill the leadership vacuum (both at the White House and at agencies) and address resource shortages in the federal government’s AI policy apparatus.

Progress Thus Far

In just 90 days, the executive branch has made serious progress. According to the White House, federal agencies have completed 29 actions in response to the AI EO, which we mapped against 31 distinct requirements in our tracker. Of these, 21 requirements were due on or before the 90-day deadline.

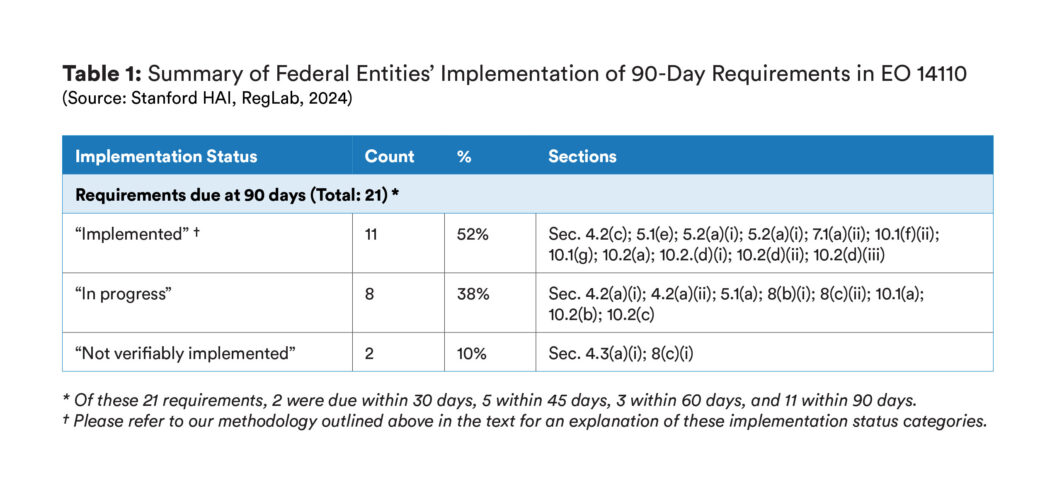

We confirmed implementation progress for 19 of those 21 requirements (90 percent): We verified 11 of them (52 percent) as fully implemented and eight of them (38 percent) as in progress (see methodology above). For two requirements (10 percent), we could not find distinct, conclusive evidence to confirm the White House’s claim of full implementation (see discussion of these requirements in Areas for Improvement below).
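The percentages quoted for these 21 requirements can be reproduced with a few lines of arithmetic. This is only a check on the rounding, using the counts stated in the text; the variable and function names are illustrative and do not come from the tracker.

```python
# Counts as stated in the text for requirements due within the first 90 days.
due_within_90_days = 21
verified = {
    "implemented": 11,
    "in progress": 8,
    "not verifiably implemented": 2,
}


def share(count: int, total: int) -> int:
    """Return count/total as a percentage rounded to the nearest whole number."""
    return round(100 * count / total)


# The three categories should account for every requirement that was due.
assert sum(verified.values()) == due_within_90_days

shares = {status: share(n, due_within_90_days) for status, n in verified.items()}

# "Confirmed progress" combines fully implemented and in-progress requirements.
confirmed = verified["implemented"] + verified["in progress"]
confirmed_share = share(confirmed, due_within_90_days)

for status, pct in shares.items():
    print(f"{status}: {verified[status]} of {due_within_90_days} ({pct} percent)")
print(f"confirmed progress: {confirmed} of {due_within_90_days} ({confirmed_share} percent)")
```

Rounded to whole numbers, 11, 8, and 2 out of 21 give 52, 38, and 10 percent, and the combined 19 out of 21 gives 90 percent.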

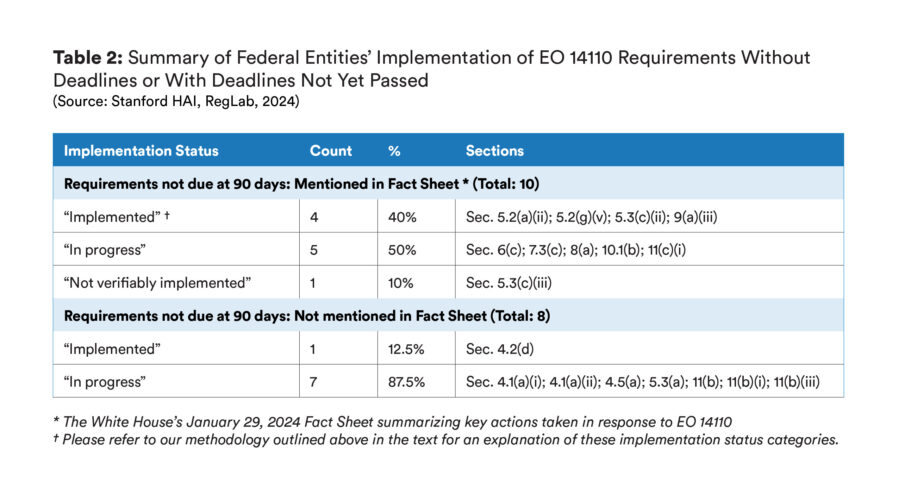

The White House Fact Sheet also references 10 additional requirements that do not have deadlines or have deadlines that have not yet passed. We verified four of these requirements as fully implemented and five requirements as in progress, while one requirement could not be verified as implemented or in progress. Considering that many of these tasks are not due for several months, this shows impressive early progress. In addition, we noted early implementation efforts related to eight additional requirements that were not even referenced in the Fact Sheet.

Of note, the National Science Foundation (NSF) officially launched its pilot of the National AI Research Resource (NAIRR) in partnership with 10 federal agencies and 25 non-governmental partners. As part of the launch, the NSF and the Department of Energy issued an early call for requests to access advanced computing resources. This pilot is a historic first step toward leveling the playing field by equipping researchers with much-needed compute and data resources, thereby strengthening long-term U.S. leadership in AI research and innovation.

The federal government’s launch of an AI talent surge is another significant step on an issue many think may be the biggest impediment to responsible AI innovation in government. Beyond prioritizing AI specialists through the U.S. Digital Service and Presidential Innovation Fellows, federal agencies like the Department of Homeland Security are pursuing new AI recruitment and talent initiatives. For example, the Office of Personnel Management has authorized government-wide direct hire authority for AI specialists and launched a pooled hiring action for data scientists. The full scale of the AI hiring surge, however, remains unknown (thus we deemed it to still be in progress) and is crucial given the acute need for technical talent in government.

In addition, many agencies have taken first steps to mobilize resources that will allow them to implement more substantive rule-making requirements in the coming months, including establishing two new task forces and publishing seven requests for information or comments.

Areas for Improvement

Despite substantial improvements over the implementation of previous AI-related EOs, there are still gaps. White House and agency reporting on implementation varies greatly in terms of the level of detail and accessibility. External observers may find it difficult to independently verify claims that particular requirements have been completed.

There are a number of reasonable rationales that counsel against full transparency in implementation, including concerns related to national security and cybersecurity as well as the tight time constraints imposed by the AI EO. For example, the Department of Homeland Security did not release detailed information pertaining to agency assessments of the risk posed by AI use in critical infrastructure systems required under section 4.3(a)(i); however, full disclosure of these risk assessments or related information could pose national security risks. Even the disclosure of which agencies have not completed their risk assessments could be considered sensitive information if it indicates an agency’s lack of readiness. Similarly, the Department of Commerce did not reveal the details of its interactions with, or information provided by, companies regarding dual-use foundation model reporting under the Defense Production Act (DPA), pursuant to section 4.2(a) of the AI EO. Despite the incomplete information, there are sufficient public statements and independent reporting for us to deem the DPA-related requirements as in progress.

But in other instances where public reporting of implementation details should not implicate security concerns, public reporting remains incomplete. In these cases, publicly available information consists only of high-level statements from the White House and/or agency heads, without additional formal announcements or statements on the relevant entity’s website. For instance, we could not find specific information beyond the Fact Sheet to support the claim that the Department of Transportation, as part of its implementation of section 8(c), established a new Cross-Modal Executive Working Group or directed several councils and committees to provide advice on the safe and responsible use of AI in transportation. And while a senior official at the Department of Health and Human Services (HHS) noted in Congressional testimony that HHS launched the department-wide AI task force required under section 8(b)(i), more information on task force activities is not publicly available. Other times, information may be publicly available but difficult to find. While many agencies have dedicated AI-related web pages, some sites are rarely refreshed and have not been updated in years.

A centralized resource that details all government action on AI policy could better facilitate accountability, public engagement, and feedback. For previous AI-related EOs, AI.gov served as a repository of information on AI policy actions across agencies. However, as this website has largely been repurposed to advertise the AI talent surge and collate public comments on draft rules, this repository function has been removed.

Looking Ahead

Progress in the first 90 days following the AI EO demonstrates that federal officials have mobilized considerable resources to ensure swift implementation and transparency, helping make the White House’s efforts to lead in AI innovation and governance a reality.

However, momentum must be maintained, and even increased, for the remaining 129 requirements. Another 48 deadlines face agencies within the next 90 days. Though agencies have already made progress on some of these tasks ahead of time, the mandated actions are significant. Notable requirements include a program to mitigate AI-related intellectual property risk, guidelines for the use of generative AI by federal employees, and an evaluation of AI systems’ biosecurity threats. As many submission windows for requests for information close, agencies will also have to digest and translate vast amounts of information into rules and guidance. Notably, the Office of Management and Budget will need to finalize its guidance on the federal government’s use of AI. Meanwhile, many of the requirements the White House has claimed as already implemented will require ongoing attention to achieve the EO’s intended outcomes.

We commend the White House and federal agencies on their transparency in documenting implementation progress. Our efforts to independently verify implementation with publicly available information highlight that there remains room for the government to provide even more detailed and structured information. They also reinforce the value of independent tracking initiatives and other public accountability mechanisms, both of which we will continue to contribute to.

Authors: Caroline Meinhardt is the policy research manager at Stanford HAI. Kevin Klyman is a research assistant at Stanford HAI and an MA candidate at Stanford University. Hamzah Daud is a research assistant at Stanford HAI and an MA candidate at Stanford University. Christie M. Lawrence is a concurrent JD/MPP student at Stanford Law School and the Harvard Kennedy School. Rohini Kosoglu is a policy fellow at Stanford HAI. Daniel Zhang is the senior manager for policy initiatives at Stanford HAI. Daniel E. Ho is the William Benjamin Scott and Luna M. Scott Professor of Law, Professor of Political Science, Professor of Computer Science (by courtesy), Senior Fellow at HAI, Senior Fellow at SIEPR, and Director of the RegLab at Stanford University.
